Art-making through Adaptive Mesh Refinement Algorithms

PRiSM Scientist in Residence: Patrick Sanan

26 March 2024

PRiSM 2021 Scientist in residence Patrick Sanan

In March 2021 we welcomed two PRiSM Scientists in Residence, Rose Pritchard and Patrick Sanan. We introduced them and their projects in "2021 PRiSM Writers and Scientists in Residence".

Following on from his earlier PRiSM Blog, "Local Refinement in Computational Science and Music", Sanan discusses here how he uses adaptive mesh refinement algorithms to help create a new audio-visual installation work.

Specifically, he discusses how code developed during his residency can be used to generate interesting signals, and gives a simple example of using such a signal to control synthesis parameters.

A Julia project with many more details on this example is available on GitHub. It includes a tutorial Jupyter notebook and quickstart instructions for running it.

By Patrick Sanan

Defining the Problem

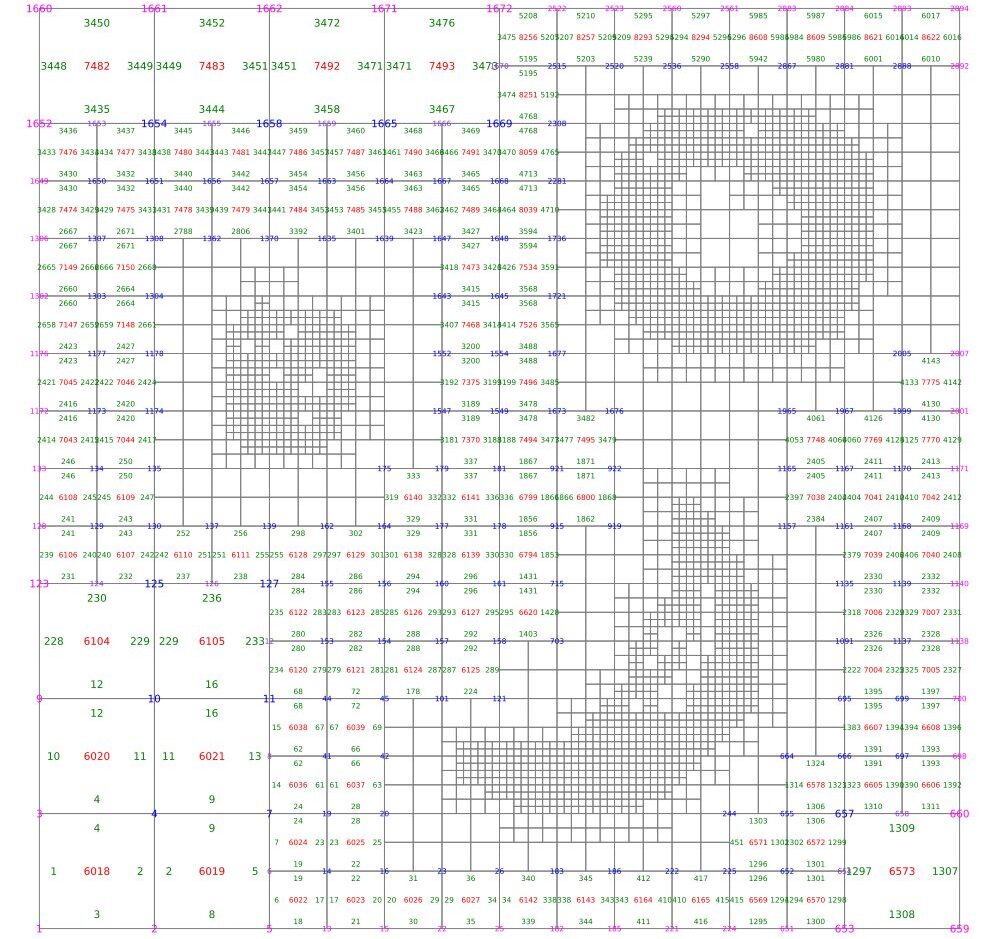

We begin with an arbitrary image, whose darkness defines both the density that drives a fluid flow and the regions of the mesh to be refined.
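To make the idea concrete, here is a minimal sketch in plain Python of refinement driven by image darkness. It is not the project's Julia code and does not use p4est: the tiny `IMAGE`, the `darkness` and `refine` functions, and the `threshold` parameter are all hypothetical names chosen for illustration. The same `darkness` value could equally be read as a density.

```python
# Hypothetical sketch: quadtree refinement of the unit square driven by image darkness.
# Nothing here is the project's API; names and values are illustrative assumptions.

IMAGE = [  # grayscale rows, 0.0 = black, 1.0 = white; row 0 is the top of the image
    [1.0, 1.0, 1.0, 1.0],
    [1.0, 0.1, 0.2, 1.0],
    [1.0, 0.1, 0.2, 1.0],
    [1.0, 1.0, 1.0, 1.0],
]


def darkness(x, y, image=IMAGE):
    """Darkness in [0, 1] at point (x, y) of the unit square (nearest pixel)."""
    rows, cols = len(image), len(image[0])
    col = min(int(x * cols), cols - 1)
    row = min(int((1.0 - y) * rows), rows - 1)  # y points up, image rows point down
    return 1.0 - image[row][col]


def refine(x0=0.0, y0=0.0, size=1.0, level=0, min_level=2, max_level=3, threshold=0.5):
    """Return leaf cells as (x0, y0, size, level) tuples.

    Every cell is split down to min_level; beyond that, a cell is split only
    if its centre is dark, and never past max_level.
    """
    cx, cy = x0 + size / 2, y0 + size / 2
    wants_split = level < min_level or darkness(cx, cy) >= threshold
    if level >= max_level or not wants_split:
        return [(x0, y0, size, level)]
    half = size / 2
    leaves = []
    for dx in (0.0, half):
        for dy in (0.0, half):
            leaves += refine(x0 + dx, y0 + dy, half, level + 1,
                             min_level, max_level, threshold)
    return leaves


leaves = refine()
```

Sampling only the cell centre is a simplification, which is why the sketch first refines uniformly to `min_level`. A real mesh library would also enforce constraints between neighbouring cells that this sketch ignores.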

Solving the Equations

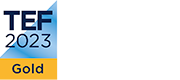

Our software provides a convenient way to use the underlying p4est library to refine the unit square and assign a degree-of-freedom (DOF) numbering suitable for solving the Stokes equations.

We can also solve the equations of Stokes flow, which yields a velocity field and a pressure field.
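For reference, the incompressible Stokes equations are commonly written as below. The symbols are assumptions, since the post does not fix a notation: $\mathbf{u}$ is velocity, $p$ is pressure, $\rho$ is the density (here taken from the image), $\eta$ is a viscosity, and $\mathbf{g}$ is a gravity vector.

```latex
-\nabla \cdot \left( \eta \left( \nabla \mathbf{u} + \nabla \mathbf{u}^{T} \right) \right) + \nabla p = \rho \, \mathbf{g},
\qquad
\nabla \cdot \mathbf{u} = 0
```

The first equation balances viscous and pressure forces against buoyancy, and the second states that the flow is incompressible.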

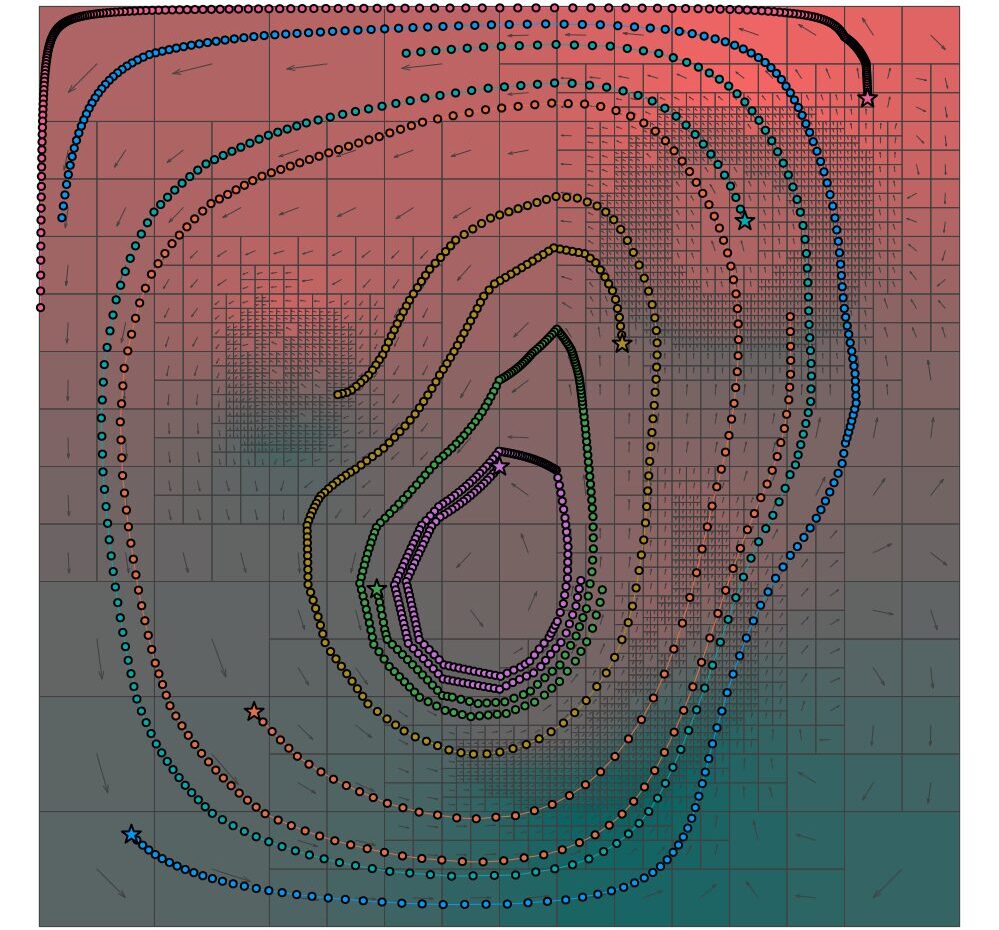

Pushing Particles

A common computational technique is to advect passive particles with the flow.

The experience of each of these particles as it moves through the flow can be traced.
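As a rough illustration of particle advection, the following Python sketch moves one particle through a velocity field with the midpoint (second-order Runge-Kutta) rule. The `velocity` function is a hypothetical stand-in, a rigid rotation about the centre of the unit square, rather than a velocity obtained from the Stokes solve, and the choice of integrator is an assumption, not necessarily what the project uses.

```python
import math


def velocity(x, y):
    """Hypothetical stand-in for a solved velocity field: rotation about (0.5, 0.5)."""
    return -(y - 0.5), (x - 0.5)


def advect(x, y, dt, steps, velocity=velocity):
    """Advect one passive particle with the midpoint (RK2) rule; return its path."""
    path = [(x, y)]
    for _ in range(steps):
        u1, v1 = velocity(x, y)                        # velocity at the current position
        xm, ym = x + 0.5 * dt * u1, y + 0.5 * dt * v1  # half step to the midpoint
        u2, v2 = velocity(xm, ym)                      # velocity at the midpoint
        x, y = x + dt * u2, y + dt * v2                # full step using midpoint velocity
        path.append((x, y))
    return path


# One particle starting to the right of the centre, followed for roughly one revolution.
path = advect(0.75, 0.5, dt=0.01, steps=628)
final_radius = math.hypot(path[-1][0] - 0.5, path[-1][1] - 0.5)
```

In this test field the particle should stay very close to a circle of radius 0.25, which gives a simple check on the integrator before it is pointed at a real flow.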

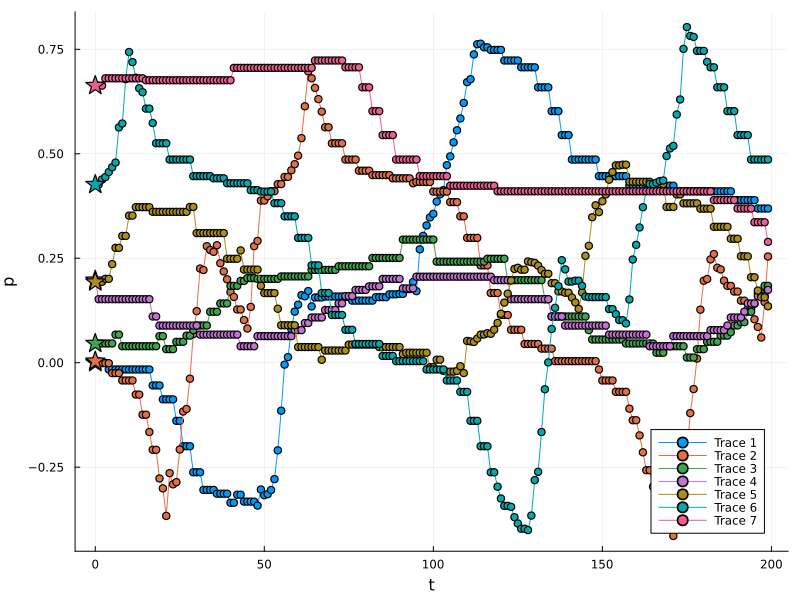

Generating Signals

We can keep track of position, pressure, or other quantities for each particle over time.
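Tracking a quantity such as pressure along a path means evaluating a field at the particle's position at each time step. The sketch below assumes, for simplicity, a field stored at the nodes of a uniform grid on the unit square and uses bilinear interpolation. The project's mesh is non-uniform, so this is only an analogy, and `bilinear`, `record`, and the sample data are hypothetical.

```python
def bilinear(field, x, y):
    """Interpolate field[j][i], stored at uniformly spaced nodes of the unit square."""
    ny, nx = len(field), len(field[0])
    fx = min(max(x, 0.0), 1.0) * (nx - 1)  # position in units of grid spacing
    fy = min(max(y, 0.0), 1.0) * (ny - 1)
    i, j = min(int(fx), nx - 2), min(int(fy), ny - 2)  # lower-left node of the cell
    tx, ty = fx - i, fy - j                             # local coordinates in [0, 1]
    bottom = (1 - tx) * field[j][i] + tx * field[j][i + 1]
    top = (1 - tx) * field[j + 1][i] + tx * field[j + 1][i + 1]
    return (1 - ty) * bottom + ty * top


def record(path, dt, field):
    """Return one record per time step: time, position, and the sampled field value."""
    return [
        {"t": k * dt, "x": x, "y": y, "p": bilinear(field, x, y)}
        for k, (x, y) in enumerate(path)
    ]


# Hypothetical 3x3 nodal "pressure" that grows linearly with y (row j sits at y = j / 2).
pressure = [[0.0, 0.0, 0.0], [0.5, 0.5, 0.5], [1.0, 1.0, 1.0]]
path = [(0.2, 0.0), (0.4, 0.25), (0.6, 0.5), (0.8, 1.0)]
signal = record(path, dt=0.1, field=pressure)
```

Each particle then yields its own list of records, and any column of that list (`x`, `y`, `p`) can be treated as a signal.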

Making sound!

Each of these particles can be used to generate a signal for compositional purposes. As an example, here I create a simple text output. The x position in the plane is used to control pan, and the pressure to control filter resonant frequencies, for several synthesizer voices generated with SuperCollider.
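One way such a mapping could look is sketched below in Python. The text layout (one "time pan frequency" line per sample), the frequency range, and the function names are all assumptions made for illustration; the actual format read by the SuperCollider code may differ. The pan range of -1 to 1 matches the convention of SuperCollider's `Pan2`.

```python
def to_pan(x):
    """Map x in [0, 1] to a stereo pan position in [-1, 1] (left to right)."""
    return 2.0 * min(max(x, 0.0), 1.0) - 1.0


def to_frequency(p, p_min, p_max, f_low=200.0, f_high=4000.0):
    """Map pressure to a filter frequency in Hz on an exponential scale."""
    if p_max == p_min:
        return f_low  # constant signal: fall back to the low end of the range
    s = (p - p_min) / (p_max - p_min)  # normalise pressure to [0, 1]
    return f_low * (f_high / f_low) ** s


def to_text(samples):
    """Format (t, x, p) samples as one 'time pan frequency' line per sample."""
    pressures = [p for _, _, p in samples]
    p_min, p_max = min(pressures), max(pressures)
    lines = [
        f"{t:.3f} {to_pan(x):.4f} {to_frequency(p, p_min, p_max):.2f}"
        for t, x, p in samples
    ]
    return "\n".join(lines)


# Hypothetical samples for one particle: (time, x position, pressure).
samples = [(0.0, 0.0, -1.0), (0.5, 0.5, 0.0), (1.0, 1.0, 1.0)]
text = to_text(samples)
```

The exponential frequency mapping is a design choice: equal changes in pressure then correspond to equal musical intervals rather than equal steps in Hz.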

See the SuperCollider source code and listen to the resulting audio!

Ongoing work

The approach here works equally well in 3D, and we’re using it as the basis for an audiovisual installation in which information is transported on a 3D non-uniform mesh.

Acknowledgements

Many thanks to Emily Howard, Dave De Roure, Marcus du Sautoy, Chris Melen, and Sam Salem at PRiSM, and to my fellow residents, Rose Pritchard, Abi Bliss, and Leo Mercer. Thanks to the GridAP project for their p4est Julia wrapper and to my collaborators on the GPU4GEO project.

This residency, and its resulting work, are supported by PRiSM, the Centre for Practice & Research in Science & Music at the Royal Northern College of Music, funded by the Research England fund Expanding Excellence in England (E3).