New Collaboration | Adversarial Timbre Composition

RNCM PRiSM researchers to join Dr Oded Ben-Tal (Kingston University) to develop a new software tool for real-time visualisations of timbral features

2 March 2022

Adversarial Timbre Composition is a research project conducted jointly by RNCM PRiSM and Kingston University. Led by Dr Oded Ben-Tal (Senior Lecturer, Music Department, Kingston University), it is co-directed by Professor David Horne (RNCM), Professor Emily Howard (Director, RNCM PRiSM) and Professor David De Roure (PRiSM Technical Director), with assistance from Dr Bofan Ma (PRiSM Post-Doctoral Research Associate).

A pilot study leading to an open-source software tool to be developed by Dr Christopher Melen (PRiSM Research Software Engineer), this project examines how timbral features can be deciphered and displayed graphically, eventually allowing musicians to visualise and interact with the sounds they make in real time.

The project commenced on 22 February 2022. Over the course of Research & Development, the team will conduct regular workshops with a number of RNCM student performers and composers. They will also keep in regular contact with the students, listening to their feedback on how the system could be improved to address accessibility issues, whilst aiming to maximise its potential for future pedagogical and creative use.

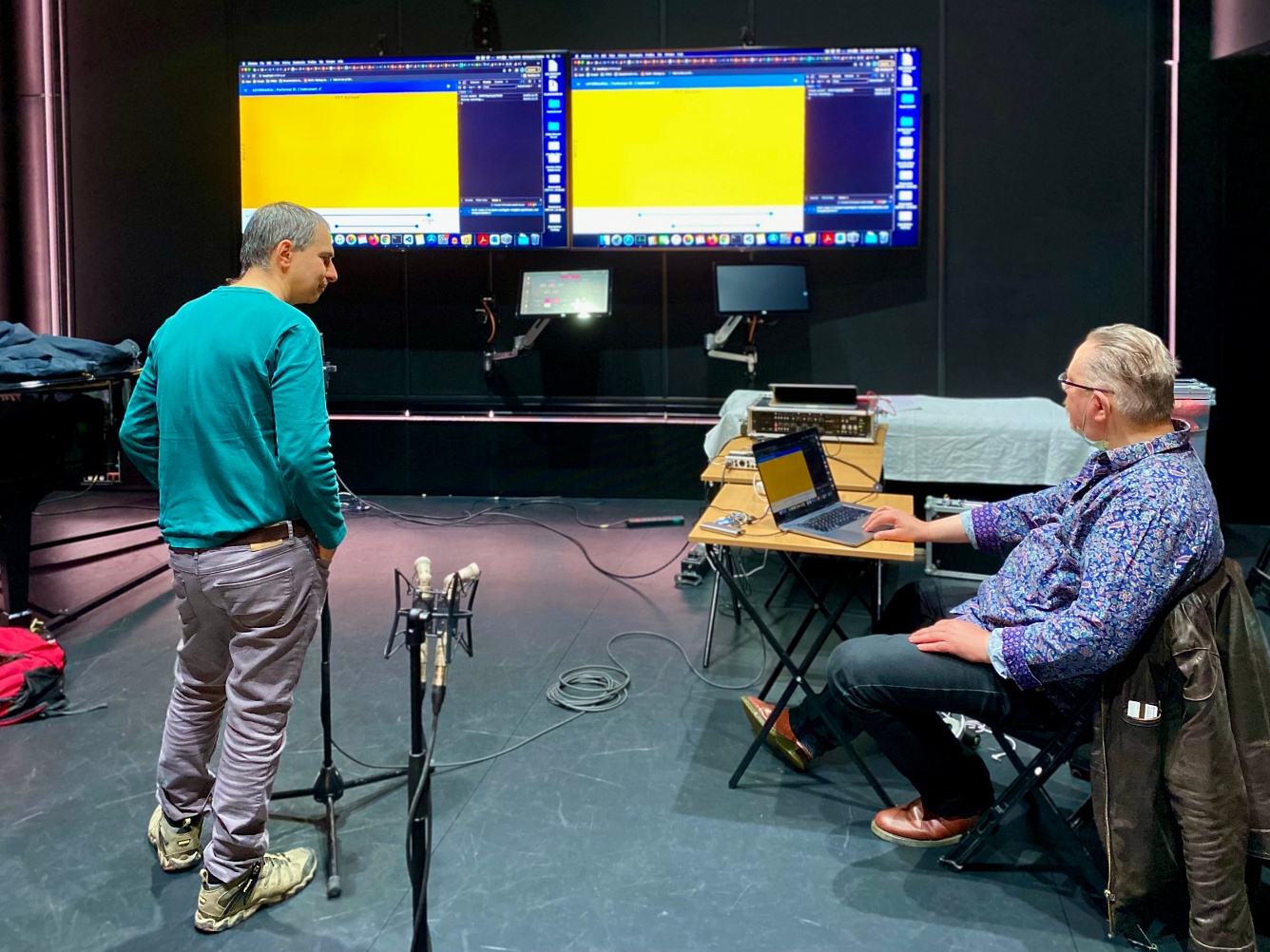

Dr Oded Ben-Tal in conversation with Dr Christopher Melen in RNCM Studio 8

Dr Ben-Tal tells us:

“Timbre has been central to compositional practice for the last 100 years or so. Tools developed for Music Information Retrieval (MIR) offer new possibilities for exploring timbre features as a composition principle.

With the help of Dr Christopher Melen, we developed an interactive tool that extracts timbre features in real time and displays them graphically. We invited performers to explore their instrumental timbre by looking at their sound as well as listening to it. We only tried a very early version of this system with a few players, but what we can say is that what you see is not always what you get.

While the visualisation sometimes validated what our ears were telling us, at other times it did not, which raises interesting questions. At times the visual display exposes aspects of the sound that we naturally gloss over – sustained sounds are less stable and static than our perception of them. But in other cases it was not easy to explain the discrepancy. We will be asking ourselves why that is the case as we continue with this research.”

The ATC project team working with RNCM student composers and performers on 22 February 2022, featuring (from top to bottom) Pablo Sonnaillon (double bass), Ana Gomes Oliveira (alto saxophone), Ross McDonnell (tenor trombone), Jack Holmes (bass trombone), and Rachel Stonham (violin).

“It was fascinating to observe our performers interact with this new software. While musicians are constantly reflecting on their sound and its production, this interface afforded an opportunity to consider the relationship with their instrument in new ways.

While sometimes at cross purposes with more conventional means of reflecting on tone and timbre, the setup promoted a deep connection with sound and how the slightest modification to dynamic or intensity could be manifested. It was equally useful to note when changes to pitch or volume did not generate such a visceral visual response, and the performer then found other means of exploring their sound to achieve this.

This tool clearly has potential not just for composers and improvisers, but also for performers to research the sounds they produce in varied and subtle ways.”

Professor David Horne, Head of Graduate School, RNCM

This collaboration is also connected to some of the ongoing research projects within the AHRC-funded research network Datasounds, Datasets and Datasense. The network is directed by Dr Oded Ben-Tal (Kingston University) and Dr Federico Reuben (the University of York), with partners including Professor Emily Howard (RNCM PRiSM), Dr Robin Laney (the Open University), Professor Nicola Dibben (the University of Sheffield), Professor Elaine Chew (IRCAM), and Dr Bob Sturm (KTH Royal Institute of Technology).