AURA MACHINE

Machine Learning & Musique Concrete

by Vicky Clarke

29 Oct 2021

Still from AURA MACHINE, premiered at PRiSM Future Music 3

1_INTRODUCTION

As Artist in Residence with NOVARS, University of Manchester, I’m exploring the collision of musique concrete and machine learning in collaboration with PRiSM, as part of UNSUPERVISED, the Machine Learning for Music working group that brings together PRiSM and NOVARS. In this article I will share some insights into the process of working towards my first neural synthesis piece, AURA MACHINE, which was featured at the RNCM PRiSM FutureMusic 3 event in June 2020.

I am a sound and electronic media artist working with sound sculpture, DIY electronics and human-machine systems to create performances and sound objects. I like to explore the sonic materiality of our technologies and consider our agency within seemingly autonomous systems.

I’d been interested in creative AI for some time, having undertaken a course on the technical history of AI at the University of Karlsruhe and a research trip to St Petersburg and Moscow as part of the British Council UK-Russia Year of Music, where I met musicians and technologists working within this emerging field of sonic AI.

I was aware of tools for MIDI pattern generation, but it was on this trip that I first heard audio produced by neural synthesis algorithms and became interested in applying this approach to my work with materials and musique concrete. It sparked questions such as ‘if a machine can generate new sonic materialities, what happens to the authentic matter when it is processed by a model?’

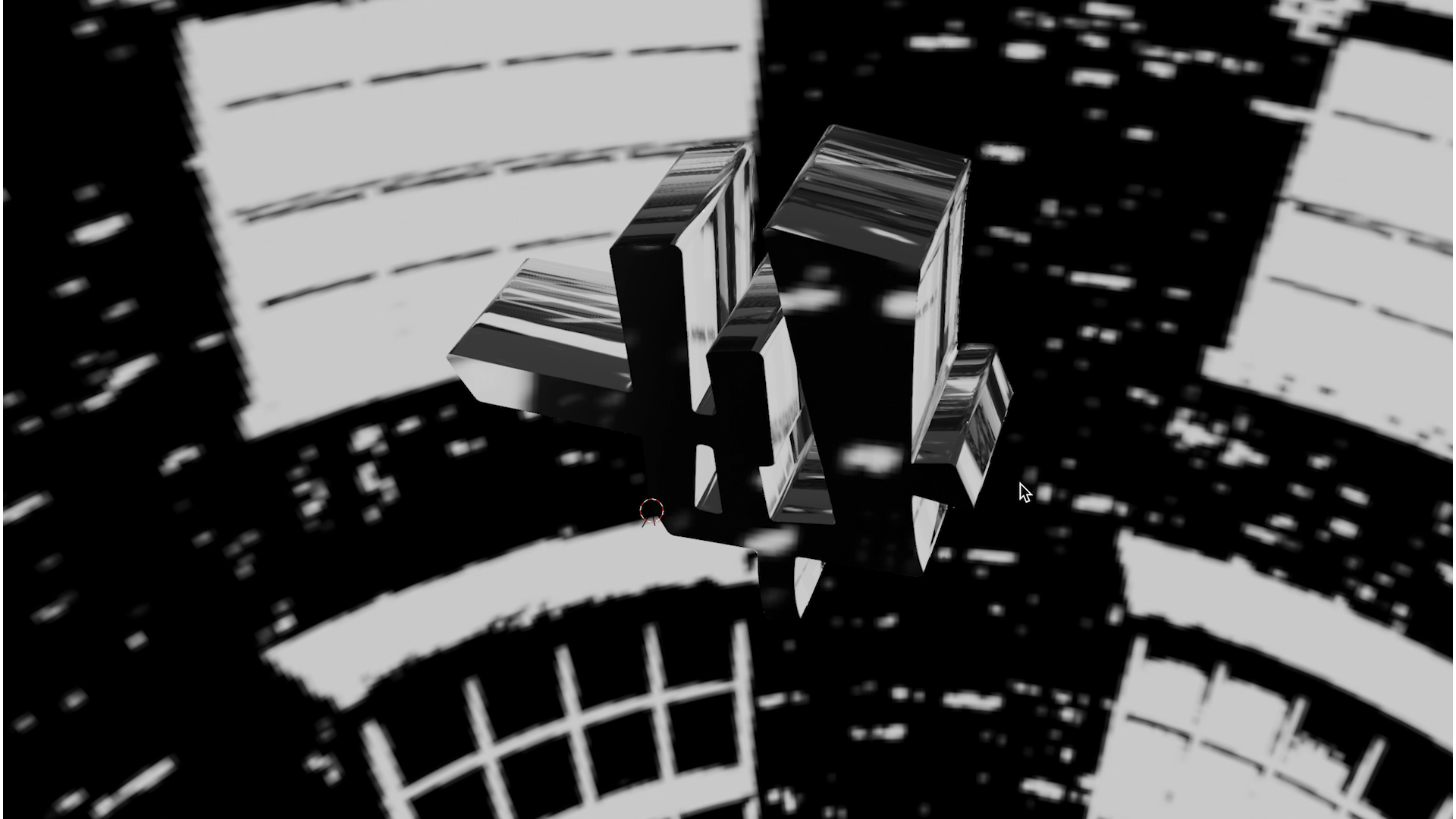

Approaching NOVARS and PRiSM on my return to the UK was a natural next step for testing these questions using the prism-samplernn code, with the research provocation “How can concrete materials and neural networks project future sonic realities?”

NOVARS research residency question

My practice involves exploring materials through sampling techniques with recorded or archival sound and sculpture. I’m fascinated by acousmatic sound, and my work draws heavily on the transformational nature of musique concrete. This manipulation of recorded sound makes us consider the very texture of the material itself, its potential, and wider questions around sonic atoms, form and matter. The residency builds on my previous research project MATERIALITY, for which I built a live system centred on industrial musique concrete, amplified metal sound sculptures, drum machines, samplers and a conductive gestural interface made from graphene, developed in collaboration with the National Graphene Institute. My key areas of critical exploration are as follows:

DEFINING SONIC MACHINE LEARNING_Considering the technological lineage of the tape experiments of Schaeffer and the GRM and of the BBC Radiophonic Workshop, could prism-samplernn be a new electronic tool for concrete music, using algorithmic and statistical manipulation of recorded material instead of tape? And… just what is this technology? Not simply a tool, but a sound generation source, a new method of sampling and/or a potential collaborator? Is neural synthesis a new tool for contemporary musique concrete?

SOUND MATERIAL WITHOUT CONTEXT_Thinking about abstraction and acousmatic sound: concrete music removes the context and origin of sound matter, radically questioning conceptions of what can be deemed music. By default, a machine learning model has no understanding of context or beauty; it doesn’t know it is ‘listening to glass’ and is therefore aesthetically unbiased. If the generated audio from a machine is acousmatic by nature, what sounds will it produce, and will we deem these beautiful or even musical? What exactly does this sound like?

ARTISTIC AUTONOMY_Where is the hand of the artist within the system of neural synthesis? Over which parts of the system do we have autonomy, influence and input? What does this mean for technological collaboration, and how do we compose and perform live with this material?

TECHNOLOGY | ACCESSIBILITY_Machine learning is an incredibly complex field, known for its ‘black box’ nature and multiple ‘hidden’ layers of neurons. As an artist, how can I begin to understand these systems, share process and demystify the tools and platforms so others can viably use them for music making?

SAMPLING_What does this technology mean for sampling, and what can the machine make that I can’t?

2_BUILDING A CONCRETE TRAINING DATASET

Data classes from the Concrete Training Dataset

To work with prism-samplernn I began to build my concrete training dataset, a detailed and intensely focused sampling experience requiring a minimum of ninety minutes of material made up of individual sound objects. To generalise and predict, the model relies on a process of statistical clustering and feature extraction; knowing this helped me decide on distinct classes of data:

- AGE OF ELECTRICITY_Recordings of noise, DIY electronics and electromagnetic frequencies

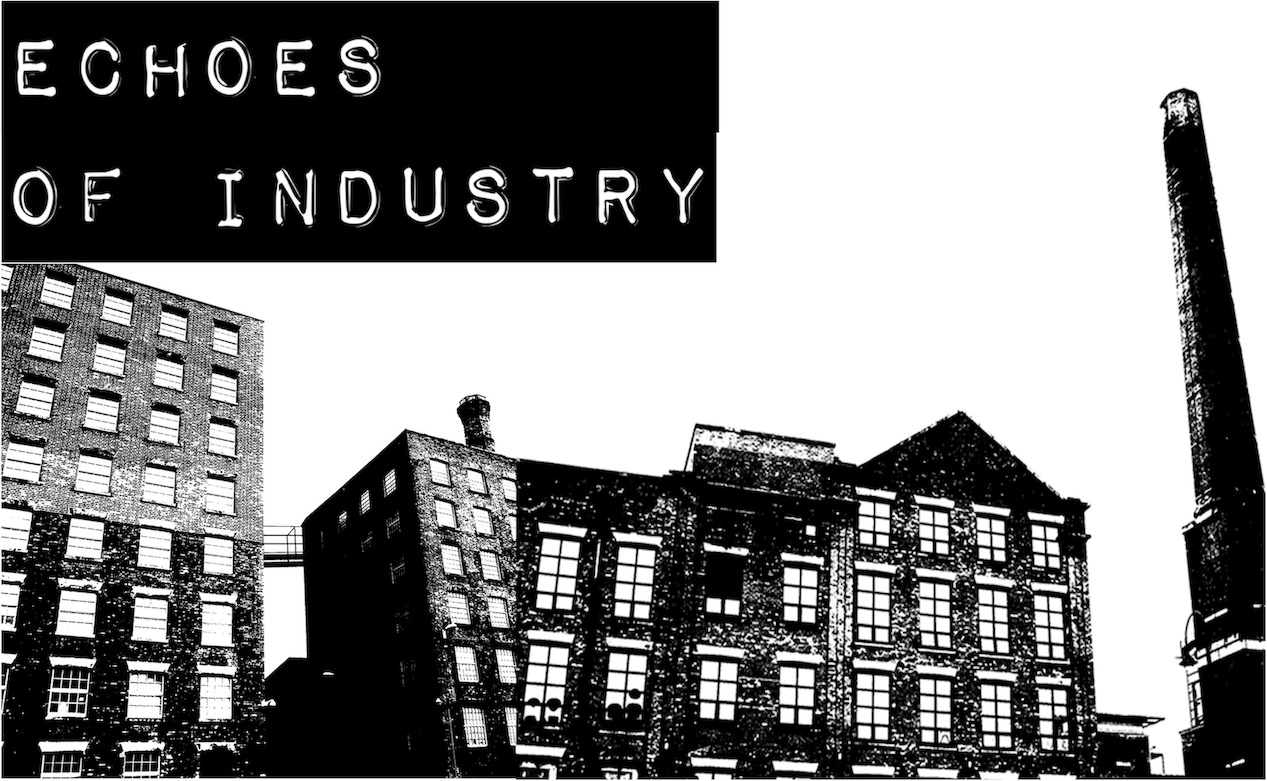

- ECHOES OF INDUSTRY_Recordings of Manchester mill spaces, tools and machinery

- MATERIALITY_Object recordings of metal sound sculptures and glass fragments
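The ninety-minute requirement is easy to check before training. The following is a minimal Python sketch, not part of prism-samplernn, that totals the duration of the PCM WAV files in each class folder using the standard-library `wave` module and counts how many fixed-length chunks each class would yield. The folder layout (one subfolder per data class), the 8-second chunk length and all function names are hypothetical assumptions for illustration, as is treating the minimum as applying to the combined dataset.

```python
from pathlib import Path
import wave

# Minimum amount of material mentioned in the text; applying it to the
# combined dataset (rather than per class) is an assumption.
MIN_MINUTES = 90
# Hypothetical fixed chunk length, chosen for illustration only.
CHUNK_SECONDS = 8.0


def wav_seconds(path: Path) -> float:
    """Return the duration of one PCM WAV file in seconds."""
    with wave.open(str(path), "rb") as wf:
        return wf.getnframes() / wf.getframerate()


def class_durations(root: Path) -> dict[str, float]:
    """Sum WAV durations per class, assuming one subfolder per data class."""
    totals: dict[str, float] = {}
    for class_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        totals[class_dir.name] = sum(
            wav_seconds(f) for f in sorted(class_dir.glob("*.wav"))
        )
    return totals


def chunk_count(seconds: float, chunk_seconds: float = CHUNK_SECONDS) -> int:
    """Number of whole fixed-length chunks a stretch of audio yields."""
    return int(seconds // chunk_seconds)


def meets_minimum(totals: dict[str, float], minutes: float = MIN_MINUTES) -> bool:
    """True if the combined material reaches the minimum duration."""
    return sum(totals.values()) >= minutes * 60


def report(root: Path) -> None:
    """Print minutes and chunk counts per class, then the overall verdict."""
    totals = class_durations(root)
    for name, seconds in totals.items():
        print(f"{name}: {seconds / 60:.1f} min, {chunk_count(seconds)} chunks")
    status = "meets" if meets_minimum(totals) else "is below"
    print(f"total {status} the {MIN_MINUTES}-minute minimum")
```

For example, `report(Path("dataset"))` on a hypothetical folder with subfolders for AGE OF ELECTRICITY, ECHOES OF INDUSTRY and MATERIALITY prints one line per class. At the assumed 8-second chunk length, ninety minutes (5,400 seconds) yields 675 chunks.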

Throughout the process I was guided by Pierre Schaeffer’s ‘Notes on a Concrete Music’, rereading his experiments in sound object extraction and his reflections on matter and form. In tandem I was reading ML textbooks on what makes a ‘good’ dataset. These texts informed my workflow in two ways: on the one hand I tried to create a varied and distinct dataset, and on the other I set out to ‘challenge the machine’ and force errors. Noise is one example. My reading told me to eliminate noise (in a statistical sense) wherever possible, and my trip to Russia had taught me that a neural synthesis model would recognise attacks more readily than drones, and events more readily than textures. As an artist who works with noise I wanted to test this, so I used noise purposefully within my dataset, picking particular machine timbres, pitches and electromagnetic textures, which on reflection became the grounding of the sonic palette in the output.

MATERIALITY DATASET EXAMPLE

Video: Glass fragment extraction, using a range of glass from National Glass Museum Sunderland

CONCRETE DATASET RULES

Throughout sound object extraction I worked to a set of self-directed rules for the dataset:

- ORIGINAL RECORDINGS: All recordings are of known material origin and self-recorded

- EXTRACTION: Activate objects and spaces to uncover a wide range of resonances and frequencies with a variety of attacks, drones, durations and pitches.

- RECORDING: Contact mics, electromagnetic detectors, consideration of depth and space, different amplitude levels.

- NO MELODY OR FORCED RHYTHM: Concentrate on the texture of the sonic fragments

- RAW MATERIAL: No effects added to the material and no overproduction

- NOISE: a) noise reduction on MATERIALITY fragment recordings and b) purposeful inclusion of noise within the AGE OF ELECTRICITY machine data class

ECHOES OF INDUSTRY DATASET EXAMPLE

This dataset is an ode to my hometown of Manchester, birthplace of the industrial revolution and a city defined by music, mills and radical politics. I wanted to capture the aura of its mill spaces, once used for textiles and industry, then occupied by music and art, and now increasingly at risk from property developers and gentrification. One mill space that isn’t under threat, and is a shining light for dynamic and subversive art, is Salford’s Islington Mill, an ever-evolving creative space, arts hub and community. The mill is currently at an exciting stage of its 200-year history, with a transformative building renovation following huge fundraising efforts from the Mill community.

I was privileged to gain access to the legendary 5th floor space days before this part of the building was closed and a new chapter began with the start of building work. Between this space, Wellington House Mill in Ancoats, and Kunstruct’s workspace at Rogue Studios, I recorded machinery, tools, paper, slate and discarded pianos; made ambient and electromagnetic recordings and contact mic recordings of pipes and cavities; captured the mechanism sounds of industrial hoists; and, most importantly, spent time with the mill’s custodian pigeon dwellers. These recordings were imbued with a sense of place, emotion and industry. Could a machine generate a sense of space and heritage?

One key observation in building the dataset concerned labour: I found the perception that machine learning simply automates everything for you to be misplaced. My experience of creating the dataset was completely human, selective and labour intensive, with the training data being the bedrock of the process and a space where the artist has complete control. I experienced a sensation of mirroring the machine: learning how the ML model trains directly influenced my workflow, and I approached building the dataset methodically. I began to categorise and label, trying to predict or second-guess the features the model might detect and extract, whilst also disrupting this through my choice of sound objects to force errors and unexpected outcomes.

3_LISTENING TO & COMPOSING WITH AI

“But the miracle of concrete music … is that … things begin to speak by themselves, as if they were bringing a message from a world unknown to us and outside us.” Schaeffer, Notes on a Concrete Music

Following training with the prism-samplernn code, working with PRiSM Research Software Engineer Dr Christopher Melen, and my own cloud-based training experiments in a Google Colab notebook, I started working with the ML-generated material. What was I listening for? Errors, unique ‘blends’ of material, events and tones: anything unexpected in terms of new materiality, some alchemy previously unheard. What would the machine recognise in the data that I couldn’t? The listening process involved going through audio from many epochs of training. As sole creator of the dataset I knew the sound objects comprising the input in intricate detail, which meant that as a listener I was naturally prone to trying to identify particular sounds, so I had to make an effort to remain impartial in order to identify these new forms.

MATERIAL REFLECTIONS_

I treated the material as I would any audio content, going through a process of listening and analysis and being guided by what the material suggested tonally, rhythmically or in terms of events. This final process of human classification and audio fragment extraction again mirrored some processes of the ML model itself: looking for patterns and common features to ‘make sense’ of the audio data, and categorising and classifying sounds such as ‘data falling downstairs’ (extremely harsh, extended metallic scraping sounds) and ‘machine wind’ (whistling and ambient tonal atmospheres).

Video: First prism-samplernn output, AURA MACHINE

There was a distinct air of the uncanny in working with this material and within this compositional headspace, as I kept reminding myself that this new material, both sound source and collaborator, was not the sound of objects, nor sited in a recording, but a statistical prediction and a machine-generated sound object. Around this time I was reading about early alchemical experiments and found many parallels and analogies between the ideas of transference of state and transmutation of matter and the multiple hidden layers of neural networks. In the above clip, my first ML sample, you can hear some of this machine alchemy creating textures I didn’t expect and couldn’t have created as a human. Here I can identify some source sounds of crushed glass and electromagnetic noise, plus the attacks of tools and the suggestion of imagined machines switching on and off. Events occur and textures morph in and out of each other, creating new forms and improvisational architectures. I was also impressed by the articulation of the very quiet audio: the model picked up delicate handling noise from the field recorder, which I loved.

4_AURA MACHINE – FIRST NEURAL SYNTHESIS EXPERIMENT

Video: AURA MACHINE 10 minute AV piece

“A sound object has an aura. Can a machine produce an aura? Taking the starting point of the sound object, a sonic fragment or atom of authentic matter, what happens to this materiality when processed by a neural network? What new sonic materials and aesthetics will emerge? Can the AI system project newly distilled hybrid forms or will the process of data compression result in a lo-fi statistical imitation?” Aura Machine abstract, Unsupervised RNCM PRiSM FutureMusic 3.

The piece takes its name from the notion of aura in Walter Benjamin’s essay ‘The Work of Art in the Age of Mechanical Reproduction’, which questions the aura of an object and its authenticity when reproduced. I thought it interesting to consider this from our 2021 creative AI context and apply it to sonic aesthetics, so my piece asks “What happens to the sound object when processed by a neural network?” As I am interested in exposing hidden systems and technological processes, for this first experiment I shaped the form of the piece to guide the listener through the process of training a neural network:

MUSIQUE CONCRETE [INPUT] >> TRANSMUTATION [TRAINING] >> AI GENERATED AUDIO [OUTPUT]

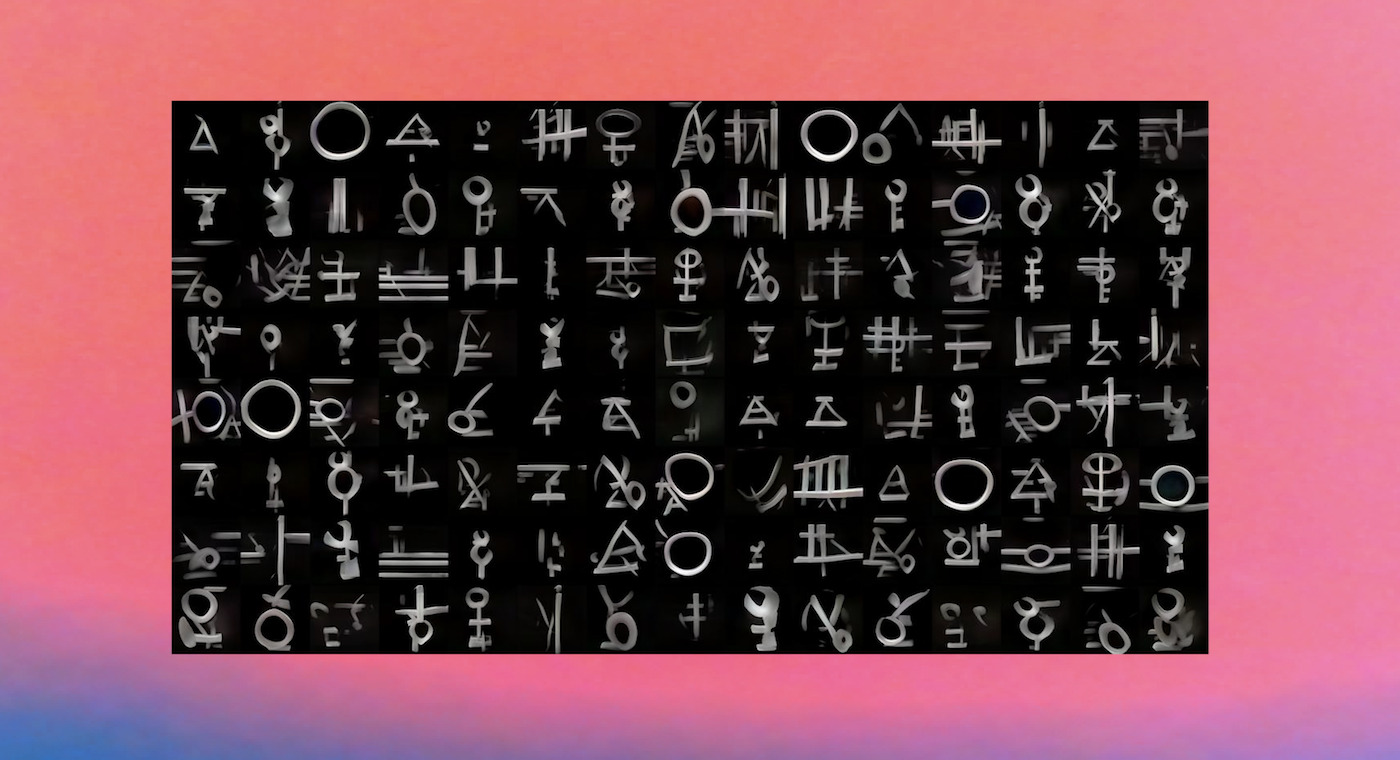

AURA MACHINE symbolic language: StyleGAN trained on a dataset of electronic circuit and alchemical symbols

I purposefully left the output AI material as pure as possible for the listener to really hear the textural makeup of the samplernn audio – to witness the changes of state (and low sample rate) that had occurred through the system.

“The genuineness of a thing is the quintessence of everything about it since its creation that can be handed down, from its material duration to the historical witness that it bears.” The Work of Art in the Age of Mechanical Reproduction, Walter Benjamin

So can a machine produce an aura? I would say yes. I was enchanted by the lo-fi sonic world of the output material; I felt it had a distinct, almost analogue warmth and depth, ironically reminiscent of early musique concrete tape recordings. This lo-fi quality is due to the low sample rate (16 kHz) required for training the model. It can be a challenge, but I decided to embrace it for the piece. Having only just begun working with this material, I intend to undertake more experiments and analysis, but at this stage I know that the aura of the soundworld and my connection to the material have captivated my sense of the electrical imaginary.
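At least part of this lo-fi character has a simple arithmetic basis. A sample rate of 16 kHz can only represent frequencies up to half that rate, the Nyquist frequency of 8 kHz, so when higher-rate recordings are downsampled for training, everything above 8 kHz has to be filtered out; left unfiltered, it would fold back into the audible range as aliasing. The short standard-library Python sketch below illustrates both points. It is an explanatory aside rather than part of prism-samplernn, and the function names are hypothetical.

```python
import math


def nyquist(sample_rate: float) -> float:
    """Highest frequency a given sample rate can represent."""
    return sample_rate / 2


def alias_frequency(freq: float, sample_rate: float) -> float:
    """Frequency at which a pure tone appears if sampled without filtering."""
    folded = freq % sample_rate
    return min(folded, sample_rate - folded)


def sample_sine(freq: float, sample_rate: float, n: int) -> list[float]:
    """First n samples of a unit sine wave at freq Hz."""
    return [math.sin(2 * math.pi * freq * k / sample_rate) for k in range(n)]


SR = 16_000  # training sample rate mentioned in the text

print(nyquist(SR))                  # 8000.0
print(nyquist(44_100))              # 22050.0 (CD audio, for comparison)
print(alias_frequency(10_000, SR))  # 6000

# Unfiltered, a 10 kHz tone sampled at 16 kHz gives the same samples as a
# 6 kHz tone with inverted sign, so the two are indistinguishable.
high = sample_sine(10_000, SR, 32)
low = sample_sine(6_000, SR, 32)
print(all(math.isclose(h, -l, abs_tol=1e-9) for h, l in zip(high, low)))  # True
```

In other words, the upper octave of brightness that a 44.1 kHz recording can hold (roughly 8 to 22 kHz) is simply absent from 16 kHz training material, which is consistent with the warm, muffled quality described above.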

The process definitely created new artefacts, blended material atmospheres and improvised collaged architectures, which, for me, pose exciting questions for composition. These features were not pale imitations or reproductions of the training data but held their own space and authenticity as new object forms and projections.

My next steps are to develop a live set for the piece, and I will be performing a version of AURA MACHINE with live visuals at the Science and Industry Museum this October. I have received support from Arts Council England to continue my research residency with NOVARS in collaboration with PRiSM, to build new sound sculptures for training (these forms are inspired by the visual ML generations) and to develop a creative AI education project for young women with Brighter Sound.

A special thank you to NOVARS and PRiSM, Professor Ricardo Climent, Dr Sam Salem and Dr Christopher Melen for their ongoing support in this research and to Arts Council England, European Art Science Technology Network for Digital Culture and Manchester Independents for making this possible.