offset iii – what makes human human?

9 October 2020

Introduction

PRiSM Doctoral Researcher Bofan Ma talks about his collaboration with Professor Keeley Crockett, Professor in Computational Intelligence at Manchester Metropolitan University, and the resulting project offset iii.

Where Did it Start?

I consider myself privileged to be living in the age of AI and machine learning. I like how deeply the technology is now embedded in my daily routine. I have got used to letting software deal with my numerous typos, unlocking my phone simply by looking at it, and listening to music my streaming services ‘think’ I would like. This is all bizarrely fascinating, given how quickly it has happened: I adapted to this lifestyle before I could even start to think about it critically. I can hardly remember the bewilderment I felt when a webpage first asked me to confirm that I was human.

I only started to notice how much technology shapes my outlook and creative practice after getting to know Keeley’s work. We first met in 2017, when she and her team were developing the project ‘Silent Talker’: a camera-assisted, lie-detecting AI system that analyses non-verbal behaviours and correlates them with pre-registered data, intended for use in a border-crossing scenario.

I asked Keeley in our first meeting: “How do you want my music to complement the system?” She said, “I would love to see how music encourages people to look at AI technology more positively.” Being a huge fan of the series Black Mirror, I laughed. “The system is completely non-invasive,” she added. “It is designed to help increase accuracy and efficiency, whilst reducing the risk of subjective human error. Perhaps it is this complementary relationship between human and machine that we could explore here?”

What a brilliant start to a music-science collaboration!

What is Silent Talker?

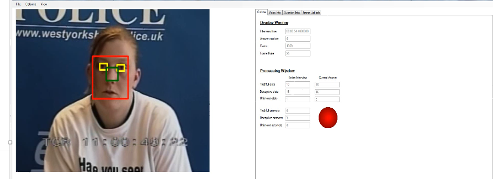

Silent Talker (Figure 1) is an Adaptive Psychological Profiling system that uses Artificial Intelligence (AI) to monitor and analyse visual non-verbal behaviour (NVB) in humans in two domains: lie detection and comprehension. Artificial neural networks detect subconscious, non-verbal micro-gestures from the face and analyse their patterns to determine psychological states. A micro-gesture is a very fine-grained NVB (for example, the left eye changing from fully open to half open). Micro-gestures are measured across a number of facial feature channels over a given time slot.

Figure 1: Screenshot of Silent Talker. Source: The Lying Game: The Crimes That Fooled Britain (42m 20s) Shine, ITV, 2014
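To make the idea of a micro-gesture a little more concrete, here is a toy sketch in Python. It is not Silent Talker's actual method, which relies on trained neural networks. The channel names (`left_eye`, `mouth`), the state labels and the function `count_micro_gestures` are all hypothetical. The sketch only shows how per-frame states on several facial feature channels might be reduced to counts of state changes within one time slot.

```python
from collections import Counter

# Hypothetical encoding: each facial feature channel is a list of per-frame
# states within one time slot. Channel names and state labels are
# illustrative assumptions, not Silent Talker's real representation.
example_slot = {
    "left_eye": ["open", "open", "half_open", "half_open", "open"],
    "mouth": ["closed", "closed", "closed", "closed", "closed"],
}


def count_micro_gestures(channels: dict[str, list[str]]) -> Counter:
    """Count state changes (toy 'micro-gestures') per channel in one time slot.

    Each change between consecutive frames, e.g. the left eye going from
    'open' to 'half_open', is recorded as (channel, from_state, to_state).
    """
    counts: Counter = Counter()
    for channel, states in channels.items():
        for previous, current in zip(states, states[1:]):
            if previous != current:
                counts[(channel, previous, current)] += 1
    return counts


print(count_micro_gestures(example_slot))
```

In a real system, counts like these would be only one possible input to a classifier. How Silent Talker weights or combines its channels is not described here.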

Silent Talker was used as a component of the European Union’s Horizon 2020 project iBorderCtrl (Grant: 700626), as part of an Automated Deception Detection System (ADDS) designed to support decision-making by human border agents. ADDS provides an estimate of the level of deception, based on analysis of a short automated interview (2–3 minutes) conducted by an avatar border guard as part of the advanced traveller registration process (Figure 2). The use of ADDS has led to new research in the field of practical ethical artificial intelligence.

Figure 2: Avatars displaying Neutral, Sceptical and Positive attitudes

What Makes Human Human?

Keeley demonstrated how the camera system tracks facial muscles and matches the results against a dataset before identifying significant occurrences. She explained how this process differs from facial recognition. It took me a while to distinguish between these muscle movements and facial expressions, let alone the emotions they imply. I was intrigued by the vocabulary and logistics behind the system’s design and operation. It was all new to me, but it strangely resonated with many things I had always wanted to explore musically.

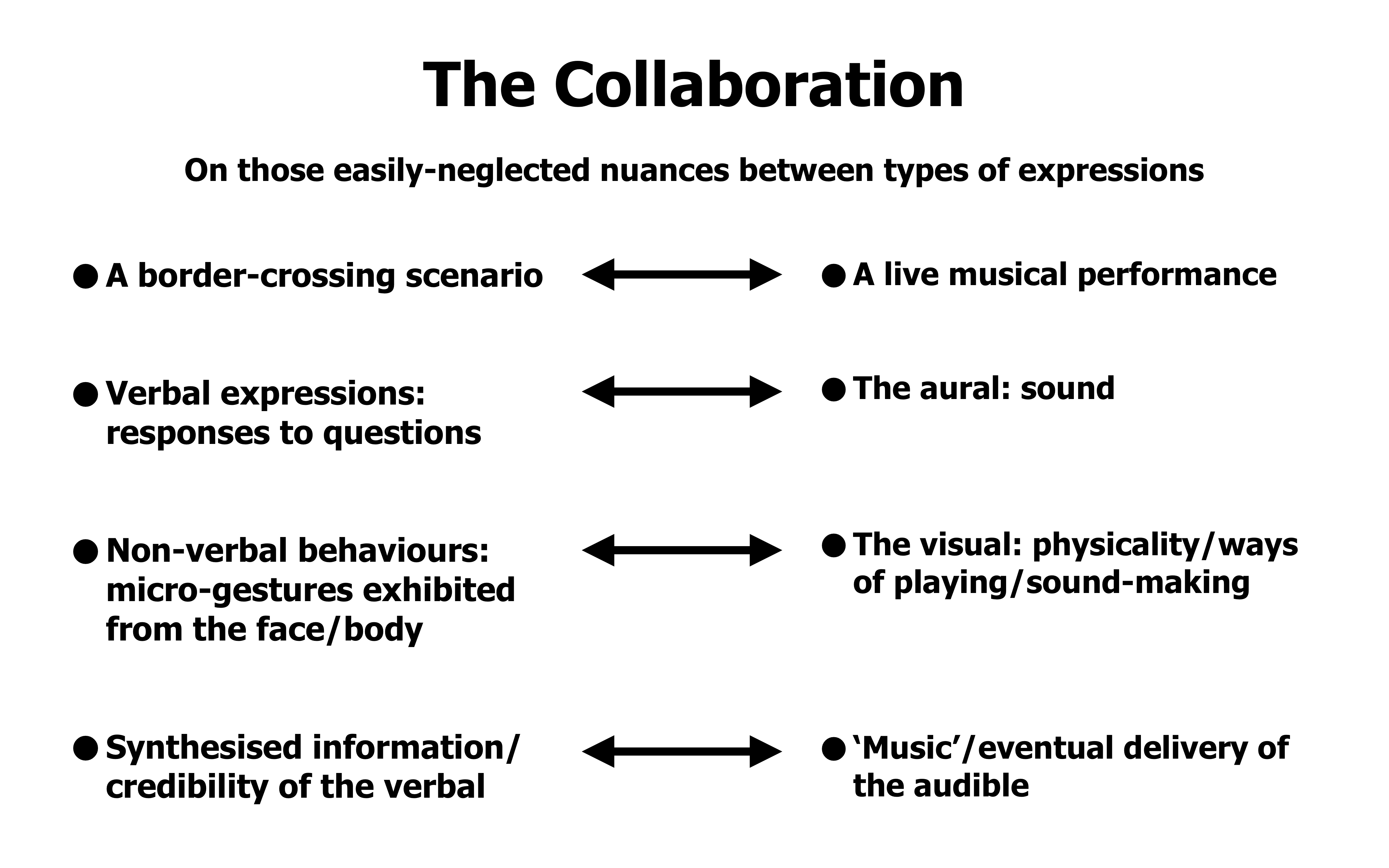

I started to wonder: what makes human human? I began my doctoral research on the premise that the visual elements of a live music performance convey as much information as the aural ones. These visual elements, as vague as they seemed at the time, refer largely to musicians’ presence on stage whilst playing their instruments. So what if I drew a parallel between such a performance and a border-crossing point? What are the musical equivalents of the verbal and the non-verbal when such a performance takes place (Figure 3)? Are there peculiar things that often get overlooked simply because they do not hinder conventional instrumental practice? What if I broke performance gestures down into their components before reconfiguring them? Could they be captured sufficiently and accurately, as if they were being monitored by ‘Silent Talker’?

Figure 3: Parallels between Bofan’s and Keeley’s research

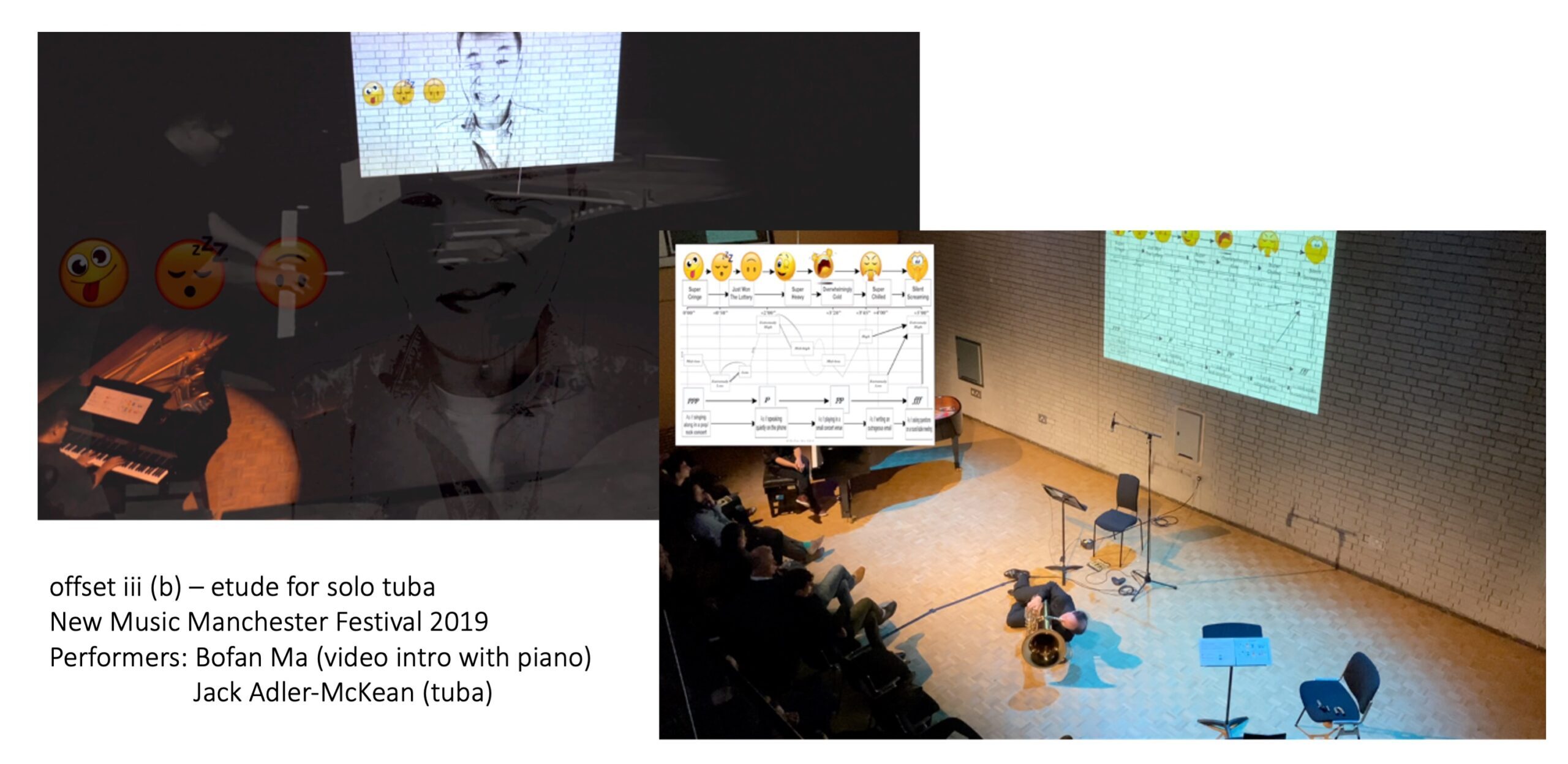

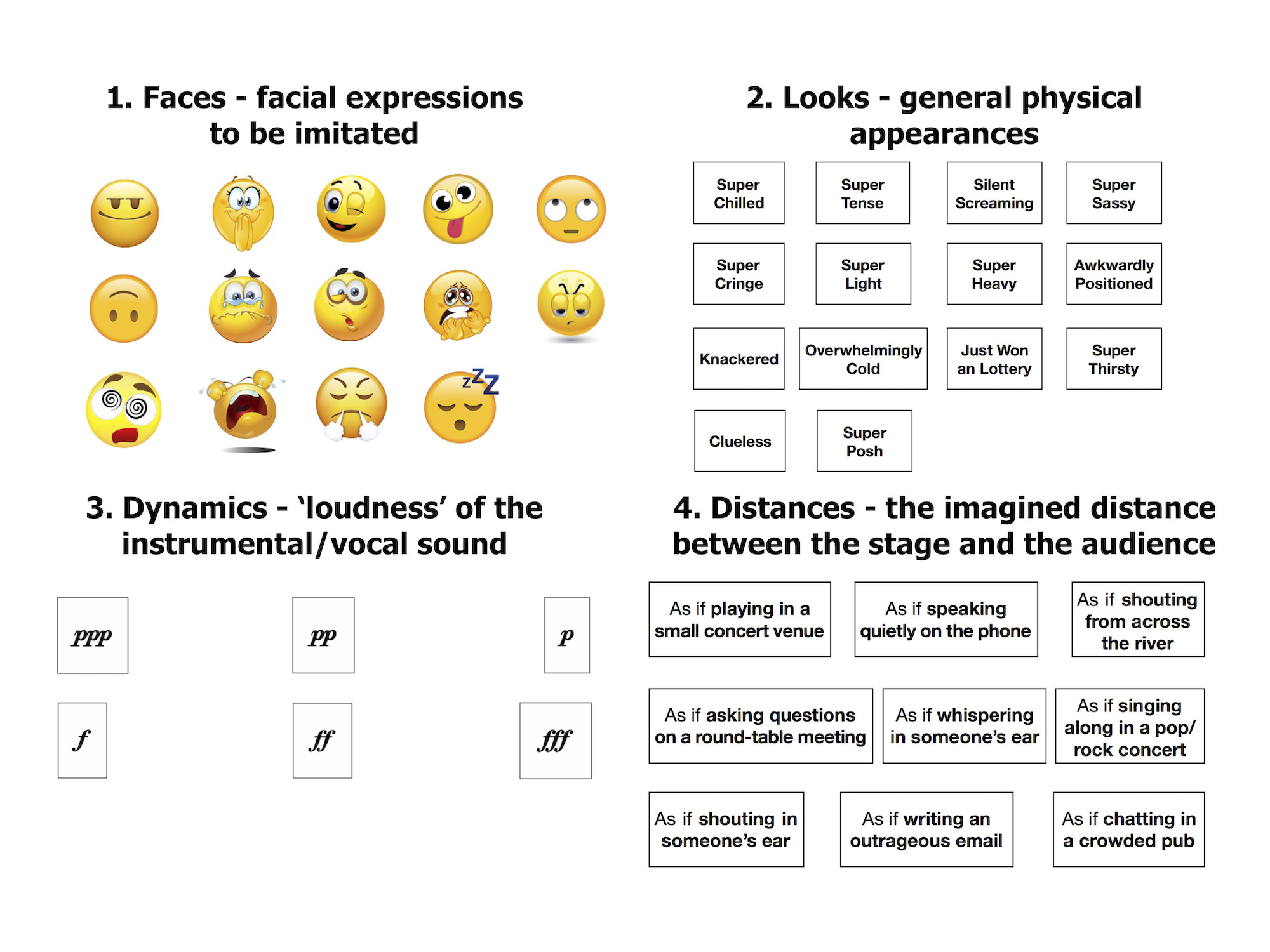

As my discussions with Keeley continued, I composed offset iii – etude (2018), consisting of two self-contained pieces: one for 2–6 performers on any instrument or voice type, and one for solo tuba. In both pieces, four performance criteria are decoupled from the conventionally notated music:

- performers’ facial expressions;

- performers’ body gestures;

- dynamics of instrumental playing/singing;

- an imagined distance between the stage and the audience.

These four criteria are referred to as ‘Faces’, ‘Looks’, ‘Dynamics’, and ‘Distances’ in both pieces. Each criterion can be approached through a number of options listed in the performance notes (Figure 4). For example, ‘Faces’ are represented by a selection of emojis, whereas ‘Looks’ and ‘Distances’ are given as verbal descriptions using a playful, metaphorical vocabulary.

Figure 4: The four criteria and ways to approach them

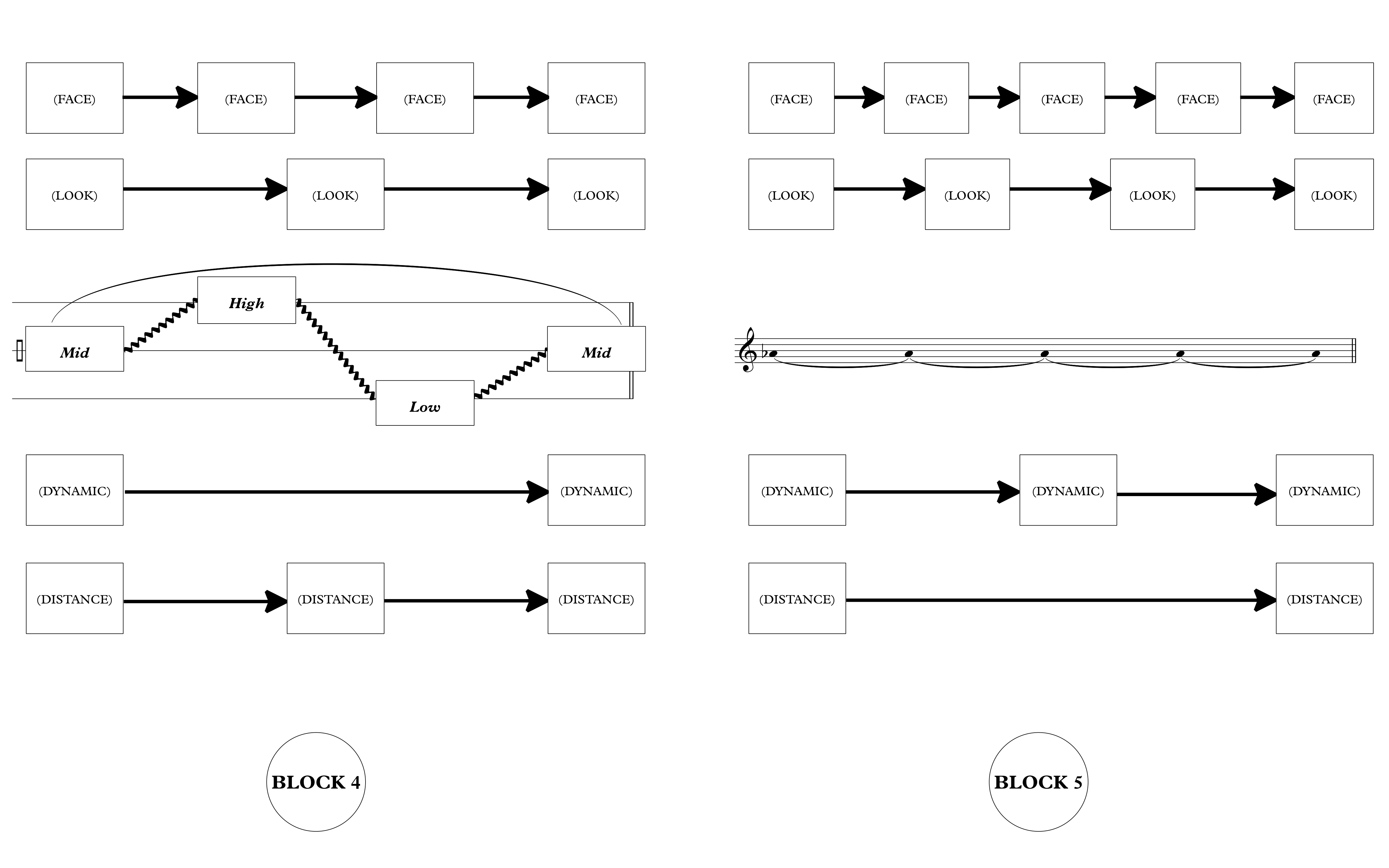

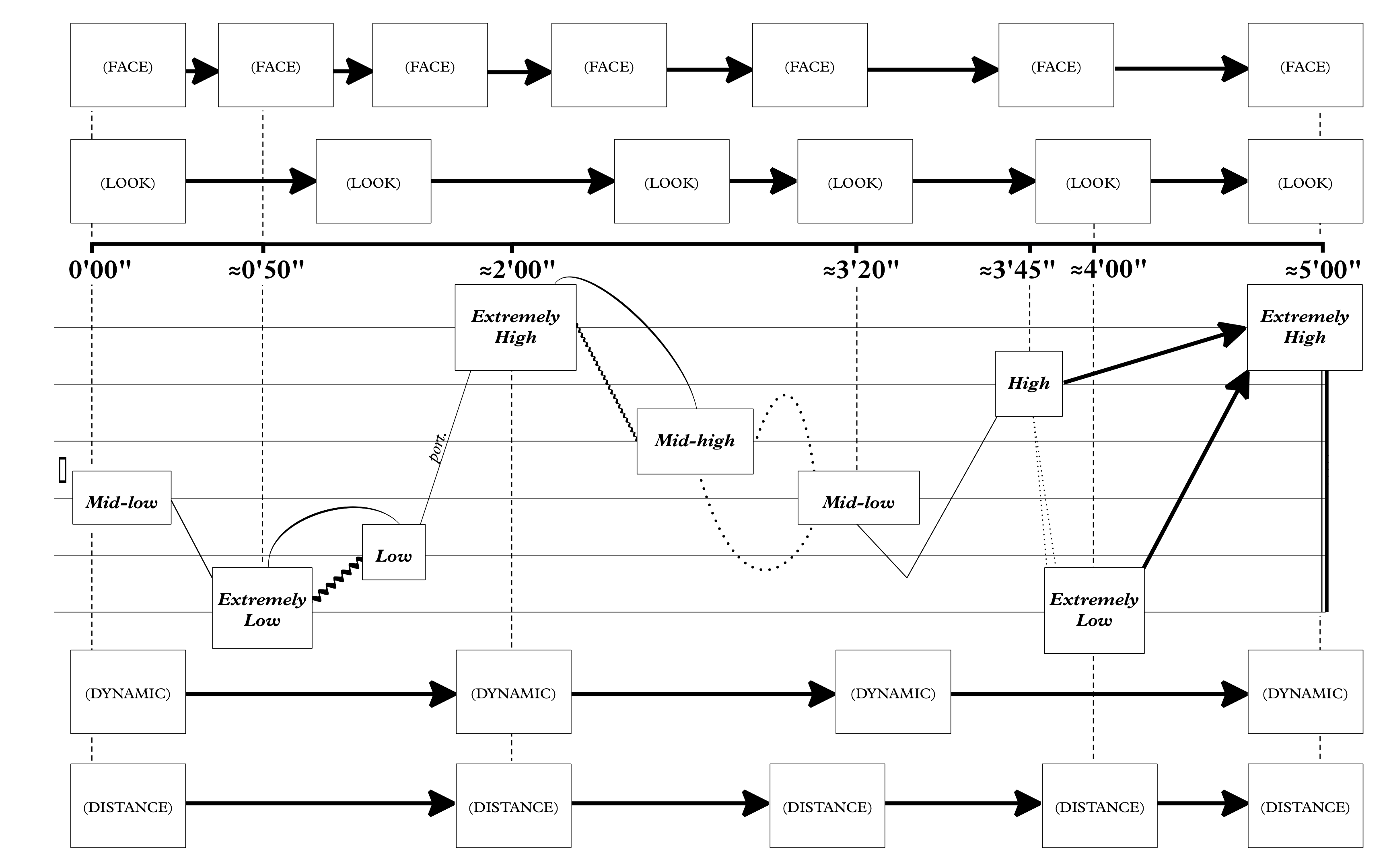

The performers are asked to reconfigure their own parts by randomly selecting and combining options under every criterion, then sticking them onto designated boxes on the score (Figures 5 and 6). Once the parts are assembled, everything on display (the four criteria and the notated musical material) is to be executed simultaneously throughout the performance.

Figure 5: Score excerpt of offset iii – etude

Figure 6: Score excerpt of offset iii (b) – etude for tuba
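The random assembly of parts described above can be sketched in a few lines of Python. This is a rough sketch under stated assumptions. The option lists below are placeholders, because the real options (emojis and verbal descriptions) appear only in the performance notes. The number of boxes per part is also hypothetical, as is the assumption that each box receives exactly one option per criterion. Seeding the random generator simply makes one particular assembly reproducible.

```python
import random

# Placeholder option lists (hypothetical): the actual options are listed
# in the performance notes of offset iii - etude, not reproduced here.
OPTIONS: dict[str, list[str]] = {
    "Faces": ["emoji 1", "emoji 2", "emoji 3", "emoji 4"],
    "Looks": ["look 1", "look 2", "look 3"],
    "Dynamics": ["pp", "mf", "ff"],
    "Distances": ["distance 1", "distance 2", "distance 3"],
}


def assemble_part(n_boxes: int, rng: random.Random) -> list[dict[str, str]]:
    """Randomly choose one option per criterion for each box on the score.

    Assumes (hypothetically) one option per criterion per box.
    """
    return [
        {criterion: rng.choice(choices) for criterion, choices in OPTIONS.items()}
        for _ in range(n_boxes)
    ]


rng = random.Random(2018)  # seeded so this particular assembly can be repeated
part = assemble_part(n_boxes=4, rng=rng)
for box_number, box in enumerate(part, start=1):
    print(box_number, box)
```

Because every criterion is drawn independently for every box, two performers are unlikely to end up with the same combination. This matches the piece's intention that each performer reconfigures their own part.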

The ensemble version was premiered in 2018 as part of PRiSM 8-cubed during British Science Week. That summer, tubist Jack Adler-McKean took the tuba version to Darmstadt, Germany, and subsequently to INCÓGNITO #2 at the New Music Manchester Festival in 2019.

offset iii – etude (PRiSM 8-cubed rehearsal). Performers: Hannah Boxall, Simeon Evans, Aaron Breeze, William Graham

offset iii – etude (PRiSM 8-cubed performance)

The Future

Bofan: Collaborating with Keeley has profoundly influenced my creative practice to this day. It has deepened my understanding of my original research questions and allowed me to address the issues around them dialectically. In my latest work, I have begun exploring the value of digital content, hashtag politics and stereotypes, as well as the placement and displacement of the self more generally. So what makes human human? Perhaps it is a constant and active engagement with contrasting cultures, politics and ideologies, as well as with new technology. I hope this collaboration is far from over. We will continue to look for opportunities to push it further, possibly by creating a large-scale work that uses ‘Silent Talker’ as a performative feature. I sincerely look forward to it.

Keeley: This partnership has brought a new dimension to my research, and it has been an enriching experience to work with Bofan, such a creative and talented composer. The pieces gave me a unique window onto the novel and interesting ways in which people outside the field interpret AI. The reconfiguration of performance gestures was fascinating and made me focus more on how AI is perceived, interpreted and understood by the general public. The realisation that AI needs to be communicated and taught far better has opened up a number of new avenues, one of which is work on Place Based Ethical AI in Greater Manchester, undertaken with Policy Connect and the All-Party Parliamentary Group on Data Analytics (report launch: October 2020). I believe Bofan and I have a huge journey ahead of us: there are so many more new ideas and spaces to explore, and I am really looking forward to it!